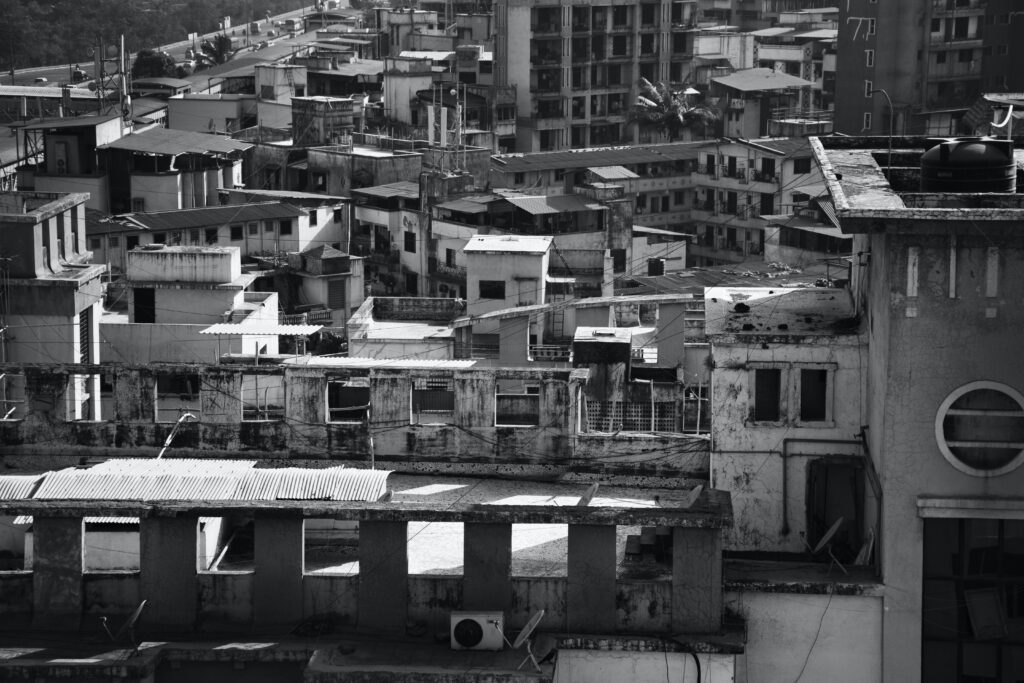

I’ve been feeling a bit stagnant in my data skills lately, so I decided to take on the #66DaysofData challenge to improve. And to start off, I picked a pretty cool project: predicting housing prices in Bangalore using a Kaggle dataset.

I found this awesome tutorial by CodeBasics that walks you through the whole process, step-by-step. I won’t bore you with all the details, but I’ll give you a quick rundown of what he did.

Data Cleaning

First up was data cleaning. Not the most exciting part, but definitely necessary. We had to go through the data with a fine-toothed comb: fixing formatting issues, dropping rows with null values, and making sure every column had the right data type.
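To make that a bit more concrete, here is a minimal sketch of what this kind of cleaning can look like in pandas. It is not the tutorial's code: the toy rows, the column names (`size`, `total_sqft`, `bedrooms`) and the helper `to_sqft` are assumptions I made up for illustration.

```python
import pandas as pd

# Toy rows standing in for the Kaggle data; columns and values are made up.
raw = pd.DataFrame({
    "location": ["Whitefield", "Hebbal", None, "Whitefield"],
    "size": ["2 BHK", "3 BHK", "2 BHK", "4 Bedroom"],
    "total_sqft": ["1056", "1200 - 1400", "900", "abc"],
    "price": [39.0, 95.0, 40.0, 120.0],
})


def to_sqft(value):
    """Turn a square-footage string into a float, averaging 'low - high' ranges."""
    parts = str(value).split("-")
    try:
        if len(parts) == 2:
            return (float(parts[0]) + float(parts[1])) / 2
        return float(value)
    except ValueError:
        # Anything that cannot be parsed becomes NaN and is dropped later.
        return float("nan")


def clean(df):
    # Get rid of rows with null values.
    df = df.dropna().copy()
    # Fix formatting: "2 BHK" -> 2, stored as an integer.
    df["bedrooms"] = df["size"].str.split().str[0].astype(int)
    # Make the data type match: square footage as a number, not text.
    df["total_sqft"] = df["total_sqft"].apply(to_sqft)
    df = df.dropna(subset=["total_sqft"]).drop(columns=["size"])
    return df.reset_index(drop=True)


cleaned = clean(raw)
print(cleaned)
```

The row with a missing location and the row with an unreadable square footage are both dropped, and the range is replaced by its midpoint. Whether averaging a range is the right call depends on the data, so treat that as one possible choice.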

Feature Engineering

Feature Engineering was more fun, as it involved selecting and transforming variables in the dataset to improve the performance of our machine learning model. We removed columns that weren’t necessary for the model, like availability and society, and dealt with categorical data like location using OneHotEncoder. It turns each location into its own column, holding a 1 if the row belongs to that location and a 0 if it doesn’t.

Outlier Removal

Outlier Removal is another crucial step in data science, as outliers can distort the analysis and predictions of machine learning models. We removed values that didn’t make sense and were probably caused by measurement or data-entry errors.
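As a rough sketch of what "doesn't make sense" can mean in code, the example below combines a sanity rule with a standard interquartile-range (IQR) filter. The rules, the `MIN_SQFT_PER_BEDROOM` threshold and the column names are my own assumptions, not the exact filters from the tutorial.

```python
import pandas as pd

# Toy rows; columns and values are made up for illustration.
homes = pd.DataFrame({
    "total_sqft": [1000.0, 1200.0, 400.0, 1100.0, 1000.0],
    "bedrooms": [2, 3, 6, 2, 2],
    "price": [50.0, 66.0, 40.0, 55.0, 900.0],
})

MIN_SQFT_PER_BEDROOM = 300  # assumed threshold, tune it for real data


def remove_outliers(df):
    # Rule 1: six bedrooms in 400 sqft is almost certainly a data-entry error.
    df = df[df["total_sqft"] / df["bedrooms"] >= MIN_SQFT_PER_BEDROOM].copy()

    # Rule 2: drop prices per sqft far outside the bulk of the data (1.5 * IQR).
    df["price_per_sqft"] = df["price"] / df["total_sqft"]
    q1 = df["price_per_sqft"].quantile(0.25)
    q3 = df["price_per_sqft"].quantile(0.75)
    iqr = q3 - q1
    in_range = df["price_per_sqft"].between(q1 - 1.5 * iqr, q3 + 1.5 * iqr)
    return df[in_range].reset_index(drop=True)


filtered = remove_outliers(homes)
print(filtered)
```

On this toy data the cramped six-bedroom listing and the wildly overpriced one are removed, while the three ordinary rows stay.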

Model Building

Finally, we built our model! CodeBasics went over three different models to see which one fit best: Linear regression, Lasso, and DecisionTreeRegressor.

- Linear regression: This is probably what we all remember from high school math as the line of best fit. It models the relationship between one or more input variables and a numerical target, and assumes that relationship is linear.

- Lasso: Lasso is linear regression with an extra penalty on the size of the coefficients. That penalty can shrink the coefficients of less useful features all the way to zero, so Lasso is particularly useful for picking out the most important features, which helps simplify the model.

- DecisionTreeRegressor: A machine learning algorithm that creates a decision tree to predict a numerical value based on input data. It’s called a tree because it branches out into different paths, similar to how a tree has branches. It can handle non-linear relationships between the input data and the predicted value.

In summary, Linear regression assumes a linear relationship between variables, Lasso is a linear model that also does feature selection by shrinking some coefficients to zero, and DecisionTreeRegressor is a decision tree-based algorithm that can capture non-linear relationships between variables.
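To see the three models side by side, here is a minimal comparison sketch. It uses synthetic data rather than the housing dataset, and scoring with 5-fold cross-validation (`cross_val_score`) plus the `alpha=0.5` setting are my own assumptions, not necessarily what the tutorial does.

```python
import numpy as np
from sklearn.linear_model import Lasso, LinearRegression
from sklearn.model_selection import cross_val_score
from sklearn.tree import DecisionTreeRegressor

# Synthetic data: the target depends on features 0 and 1; feature 2 is pure noise.
rng = np.random.default_rng(0)
X = rng.uniform(0, 10, size=(200, 3))
y = 5 * X[:, 0] + 2 * X[:, 1] + rng.normal(0, 1, size=200)

models = {
    "linear_regression": LinearRegression(),
    "lasso": Lasso(alpha=0.5),
    "decision_tree": DecisionTreeRegressor(random_state=0),
}

# Mean R^2 over 5 folds for each model (higher is better).
scores = {
    name: cross_val_score(model, X, y, cv=5).mean()
    for name, model in models.items()
}
for name, score in scores.items():
    print(f"{name}: mean R^2 = {score:.3f}")

# Lasso's penalty pushes the coefficient of the irrelevant feature toward zero.
lasso_coefs = Lasso(alpha=0.5).fit(X, y).coef_
print("lasso coefficients:", lasso_coefs.round(3))
```

Because this toy target really is linear, the linear models should score very well here. On real housing data the ranking can easily come out differently, which is the whole reason for comparing.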

Conclusion

So there you have it! A quick rundown of my first day of #66DaysofData. If you want to take a look at the notebook you can check it out on GitHub. Stay tuned for more!